Patriotism and Publication

Jeremi details his recent publication in Towards Data Science; Luca discusses learnings from a podcast on a16z's American Dynamism practice

Welcome back to Jeremi and Luca’s Newsletter, a weekly update brought to you by two friends on opposite ends of the country, connected by a relentless desire to learn.

The topics may seem random and disjointed (they are), but would it be fun otherwise? Either way, we promise each issue will be filled with insights, learnings, and updates, in what we hope is a good way to stay connected to friends and family.

In this second installment, enjoy insights from Luca’s podcast learnings and the story behind Jeremi’s article publication.

Luca: American Dynamism

In early May, I was perusing The Wall Street Journal when I came across an article titled “The Smartest People in the Room Are All Listening to the Same Podcast.”

I’ll be the first to tell you that I was not in that room. So, I immediately clicked into what would be my first introduction to Acquired, a podcast focusing on business history, growth, and strategy.

This week, I particularly enjoyed listening to the “Investing in American Dynamism (with Katherine Boyle)” episode. Boyle, a General Partner at Andreessen Horowitz, invests in companies that support the national interest, often in sectors like defense, aerospace, education, healthcare, and transportation.

Sounds straightforward to invest in your country, right? Well, not quite—the American Dynamism fund only launched in 2023.

This seems to be primarily due to regulatory capture; it’s much easier to innovate in untouched areas than in long-standing industries that are heavily controlled by their largest players (think defense). This in turn makes it “a lot easier to innovate in the virtual world than in the physical” (Boyle), causing infrastructure and other more “material” industries to fall behind and lack investment.

That point, along with the fact that the cost of creating a startup is continually decreasing, means that people who would have gone into government 20 years ago to fix healthcare (for example) are now able to build their own solutions and have the same (or outsized) impact. And when we think about why the U.S. is so behind in healthcare, we can quickly point to the lack of technology.

“Everything is less expensive in consumer land except for healthcare, except for housing, and except for education. It's really because technology has not touched those sectors, and will not touch those sectors unless we do something about it.”

Hence, the American Dynamism fund: an injection of capital into Silicon Valley tech projects that are solving national problems at a faster rate than policy ever has or will.

Artificial intelligence will undoubtedly accelerate this whole process, bringing technological solutions to industries that have been largely ignored. And, at a higher level, as Silicon Valley continues to collaborate with Washington, as the “culture and the counterculture merge” (Boyle), America will experience dynamism.

Two years ago, the CIA named its first Chief Technology Officer, a Silicon Valley “mascot” of sorts with considerable private sector and startup experience. What better signal of what lies ahead for our country?

Jeremi: A (small) Triumph

A few days ago, something really cool happened. I had an article published by Towards Data Science (TDS).

This might seem a little silly—TDS is just a publication on Medium, which itself is just a social publishing platform. But it was important to me for two reasons:

1. I “grew up” on TDS. Which is to say, since I started learning about Machine Learning in 2021, I have always found the articles from TDS to be the highest quality and the ones that taught me the most.¹ It’s a full-circle moment to now be published by them.

2. This felt like validation for the decision I made a few months ago to shift gears and learn about Natural Language Processing.

Natural Language Processing (NLP) is the field of Machine Learning that deals with computational models operating on text; it is the field that ChatGPT and other Large Language Models (LLMs) come from. Before, I had mostly focused on Reinforcement Learning (a different area of Machine Learning).

So am I a sellout? Jumping ship and going to NLP because that’s what’s in vogue and what everyone is talking about?

Kind of.

I think Reinforcement Learning will have its moment. I do. But I cannot ignore the massive leaps that are happening in NLP. As childish as it sounds, I don’t want to watch as this wave passes me by.

So when I had the opportunity to join a reading group on Natural Language Processing, I took it. Even if it felt like a betrayal, I wanted to understand why NLP had been so successful.

As we read research papers, reproduced results, and discussed, I came to the obvious realization: hey, this is really cool. The model architecture is elegant. The theories about how ChatGPT represents meaningful concepts are striking.

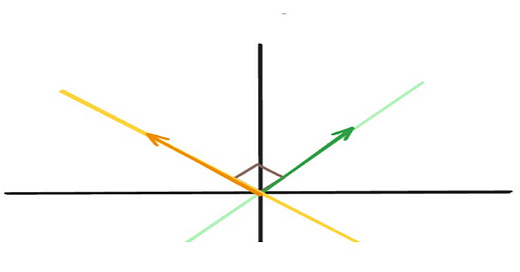

It was cool enough that I thought, maybe I should write about this. Hence, I wrote two articles. One is a long, dense guide to Transformers (the model architecture behind ChatGPT). The other is an explanation of how ChatGPT represents concepts as numbers.

On a whim, I decided to submit the second one to Towards Data Science. I’ve submitted articles before and been rejected. But this time around, I had a feeling that I had something interesting to say, more than I ever had before. And this time around, TDS agreed with me.

“Fruits of your labor,” I guess.

¹ To give an example of a great TDS article: this article by Adrien Lucas Ecoffet was the best explanation of Policy Gradients I have ever encountered. I read it almost two years ago, but I’ve never forgotten it.